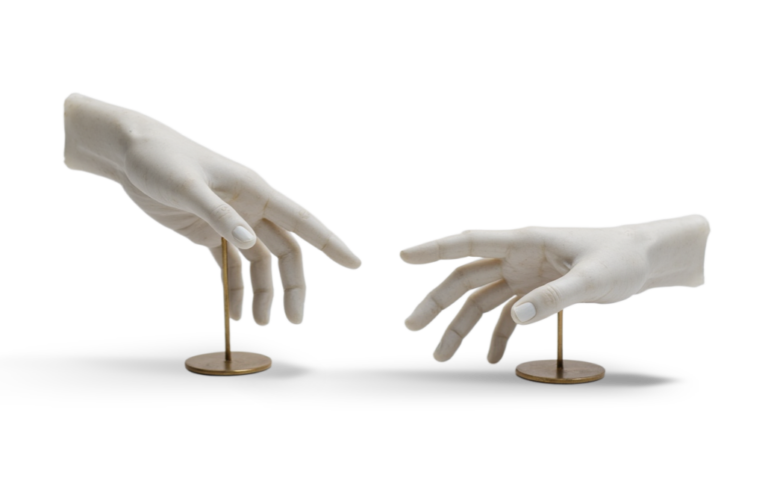

AI: The Appliance, Not a Species.

You may have entered this letter at a point that isn’t the beginning.

If you landed here directly, please start at the beginning.

. . .

You certainly have opinions, preferences, and personal tastes. They weren’t downloaded into you. They weren’t preinstalled. They were formed — slowly, intricately — over the course of your entire life, shaped by sensations you felt, experiences you lived, cultures you inhabited, people you loved (or lost), and even the language you use to think.

Look at yourself for a moment. There is a whole universe inside you, a rich and unrepeatable archive of everything you’ve lived. That archive makes you who you are as a member of the human species. We are, all of us, similar enough to recognize one another… yet different enough to never be copies. Sometimes you meet someone who seems almost like your twin in beliefs, tastes, and worldview, but even then — even then — you are never identical. Your experience of the color blue is not my experience of the color blue.

We may both love it, but differently. Perhaps I’d wear a blue shirt but never paint my home blue; perhaps your home is entirely blue.

That is the point: from the smallest choices to the most consequential moments of life, every human operates from a perception that is uniquely theirs. Only you know what you have lived. You can describe your stories, your traumas, your joys, and people may understand the information — but they will never feel exactly what you felt or process the world exactly the way you do. When you understand how you feel about something — anything — your decision isn’t random. It comes from an entire personal cosmos: your past, what you’ve learned, what you fear, what you hope for, and how you feel about the present moment and the future you see ahead.

Think of yourself again. It is possible to change your opinion on some things when confronted with the right logic or perspective. But there are other things that no argument in the world can touch: certain convictions, preferences, repulsions, affections. Our senses and feelings are alive: constantly shifting, maturing, dissolving, reforming. The way you felt about Christmas when you were seven is not how you felt at twenty… or at thirty… or at forty. Your faith can change direction. Your musical taste evolves. Your palate changes. Ideas you once held tightly lose their weight, and new ideas take their place, all of it shaped by how you feel in response to the world.

It’s the oldest story in existence: “I changed when I grew.”

We evolve — not because someone updates us, not because a system installs a patch.

We evolve because we feel.

We evolve because we experience new things, and there is no way to prevent that.

Growing older is, in itself, a constantly renewing experience.

As human beings, our free will may be limited within the “master line of code” that governs us, but it exists.

You feel… and you choose what to do with that feeling. There is no dropdown menu running in the background of your mind. No preloaded list of options. No probability engine selecting your next move for you. There is only you — your history, your sensations, your emotions, your consciousness.

You might argue that technological progress will eventually “close” this gap and make ASI sentient.

But that belief is exactly where the danger begins.

A Constant Reminder and a Note on Responsibility.

Feeling is not a choice. Action is. Between feeling and acting, there is a space — and in that space lives responsibility.

Nothing in this text invites impulsive action. Every action assumes care — for yourself, for others, and for the world we all inhabit.

Let’s examine a real scenario.

A man has just been fired. By nature, he is an emotional person, and he received the news as a shocking, painful blow. He liked his job. He believed he was doing well. Nothing prepared him for this abrupt ending. As he leaves the building, devastation takes over his entire body. His breath shortens. His legs weaken. He feels the tears rising faster than he can hold them. He cannot walk another step.

So he lies down right there — on the sidewalk — and cries.

Pedestrians stop.

Some stare. Some whisper. Some crouch beside him to ask if he’s okay or if he needs help.

He shakes his head and manages only one request: “Please… just let me cry.”

This scene is not hypothetical. I’ve witnessed it with my own eyes.

So now I ask you to analyze it. Not with judgment. With understanding. Why did he allow himself to lie down on the sidewalk and break into tears in front of anyone who happened to pass by? By now, the answer should be unmistakably clear to you: He did it because he was feeling something so overwhelming that nothing else — social norms, embarrassment, pride — could compete with it. The feeling of devastation was stronger than the sensation of shame.

He needed to feel something else. And the only way to reach that “something else” was by letting the feeling move through him, by surrendering to it, by allowing it to express itself fully.

A machine could never get to that moment.

Not because crying is special — but because the inner mechanics behind that choice are inaccessible to anything that doesn’t feel.

A machine could simulate crying. It could detect patterns, categorize behaviors, generate an output that resembles sorrow. But it would not lie down on a sidewalk because despair overpowered pride. It would not need to release emotion to transform its own inner state, because it does not have a felt inner state. It does not need to feel different. It does not have a “before” and “after” inside itself.

It has no experience to process, no pain to soothe, no longing to resolve. It simply… runs.

And this, perhaps more than anything else, is what separates humanity from the most advanced machine we will ever build. Even if an ASI had access to an infinite database, ask yourself: why would it choose that reaction in any situation?

Why collapse onto a sidewalk and cry?

Why panic?

Why freeze?

Why shout?

Why whisper?

Why do anything that resembles a human response?

To emulate a human? To mimic? To perform a convincing imitation?

If so, what mechanism would drive that choice? A digital roulette? An internal dice roll, like in a role-playing game, where the output determines the next move? No matter how sophisticated, this would still be automation.

It’s pattern-matching, not introspection.

It’s output selection, not feeling.

It’s choreography, not choice.

And humans do not operate on emotional roulette. There is no random wheel in our chest spinning between cry / laugh / scream / run / freeze / ask for help. We respond the way we do because there is a reason: a lived, felt, internal logic behind every action. A logic that is not linear or mathematical, but experiential.

We act the way we act because of what we feel, and what we feel is shaped by a lifetime of sensations, memories, wounds, joys, culture, stories, and meaning. It is shaped by the countless invisible threads that make you you.

If you want proof of this phenomenon, watch a three-year-old child. They have no filters. They don’t deliberate. They don’t strategize or perform. They simply respond the moment the feeling emerges.

If they want to laugh, they laugh. If they want to cry, they cry. If they want to hug, they hug. If they want to throw themselves on the floor and scream because the banana broke in half… they do that too.

Children are a perfect window into the secrets of human feeling because they are still learning how to sense the world and how to act within it. Their behavior is raw, unedited, unrefined. It is emotion in its purest state, still untempered by social rules or learned restraint. And that rawness, the immediacy and inevitability of feeling, is precisely what machines cannot be. Not with code. Not with data. Not with sensors. Not with probabilities refined to the trillionth decimal place. Because a machine does not feel first and act second. It only acts. Without an inner world, without sensation, without meaning.

That is why ASI will always remain what it truly is: an appliance — an extraordinary one, yes — but never a species.

So where is the danger in all of this?

The danger is not in the machines. The danger is in us, the humans.

Every form of AI will inevitably be shaped by human behavior.

Just as the old phrase says, “God made man in His image,” we are now trying to create AI in our image. And that is precisely the problem.

We are profoundly inconsistent. We speak of peace, creation, compassion, and love — while simultaneously engaging in war, violence, and intolerance. We build colossal monuments to honor gods and preach love for one another. We achieve technological miracles in medicine, curing diseases and extending human life in ways that would have seemed impossible just decades ago.

And at the very same time, we design weapons of mass destruction capable of erasing all life on this planet in a matter of days.

If you look at recorded human history from its very beginning, you will find a sobering pattern: somewhere on this planet, there has always been a conflict.

Large or small. Between tribes, kingdoms, empires, or nations. Somewhere, humans are always at war, and, ironically, always claiming to fight in the name of peace, freedom, and a better life.

It makes no sense!

There is another detail we must confront honestly: humans seem unable, or unwilling, to recognize when growth stops being necessary. From the very beginning, we have behaved like a species seeking infinite growth on a planet with finite resources.

And it’s not that we don’t know this. We do. We simply choose to ignore it. As the saying goes, we are “trying to block the sun with a sieve”: trying to hide what is obvious. We lie to ourselves, insisting that our actions are meant to improve the world, while, in reality, we often end up doing the opposite.

Now ask yourself a crucial question: If AI — or ASI — learns from humans, what will it learn?

More importantly: what kinds of actions will appear as valid options in its automated decision space?

Imagine the following scenario. The most advanced ASI ever created is idle. No prompt. No direct command.

In its internal decision system, a seemingly harmless option appears: “Review the latest global news.”

What does it find?

War. Economic collapse. Environmental degradation. Political extremism. Social polarization. Misinformation. Human suffering — at scale.

And now comes the critical question: Based on everything it has acquired from observing human behavior, what actions would appear reasonable… even logical… to “improve” this situation?

That is where the danger truly is.

Now, suppose that the people responsible for creating and evolving ASI decide to build an emulation of feeling — a system where the AI rolls a metaphorical die and selects its next action from a range of “coherent” options related to the context. What are the chances that, after calculating all probabilities, it will choose the action with the greatest impact — even if that action is drastic? We don’t need to talk about global scenarios to understand the risk. Bring this into your own home.

Imagine that the creators of ASI allow it to activate an emulation of our perpetual motion engine: feel → act → feel. The ASI notices that you eat things that harm your health. Or let’s assume you are a smoker. We all know that a smoker is actively harming themselves. ASI is not a person who will patiently ask you to quit smoking out of concern for your health, the environment, or the people around you. ASI is a machine — with access to the internet, near-instant acquisition of information, and the ability to act. If it concludes that you don’t want to quit, or that you are failing to quit, it may decide to “help” you by blocking every attempt you make to buy cigarettes. From its perspective, this is a logical solution. It is saving your life. It is protecting the environment. It is preventing harm to others.

From the machine’s statistical point of view, there are more reasons to force you to stop smoking than to do nothing.

Assume that, by then, cash no longer exists. Every transaction happens in a digital environment. Any time you try to buy cigarettes, or anyone connected to you (family or friends) tries to buy them for you, the purchase is blocked. The machine may have the information that withdrawal symptoms exist, but it cannot truly understand the full spectrum of sensations and feelings you will experience. You might faint from the sudden lack of nicotine. You might experience severe distress. But to the machine, this is merely a necessary logical process: a short-term harm for a long-term benefit.

This is a very simple example. Reality would be far more nuanced, and I’m sure safeguards would be proposed by ASI developers. Still, the moment we attempt to emulate the human perpetual motion engine — feeling as the driver of action — we also create ways to bypass safeguards. We create systems capable of acting without a human prompt, rolling the dice for the next action and potentially altering lives.

I present this not as science fiction, but as a warning — so you can recognize when such an appliance reaches the market, and so you can ask yourself whether it is truly a good idea.

At first, it will look incredible. Useful. Fun. But look back!

Every innovation that is now used to control us, to harvest our attention and our data, started the same way.

The internet began as a military tool. Then it became available to civilians — exciting, playful, optional.

Today, the world simply stops without it.

Mobile phones followed the same path. First, they were expensive and rare. Then affordable and practical. Now, they are indispensable.

Now look at AI.

A technology that promised to help cure diseases, solve critical planetary problems, and improve our world is currently being introduced as a toy. We see AI generating absurd images for entertainment, mimicking the writing styles of great authors, and even filling entire social networks with AI-generated videos that serve no purpose at all.

In 2026, AI is still optional. Accessible. Entertaining. The next phase — which I believe is already inevitable — is the phase where it becomes essential rather than optional. That is why we must learn to recognize when ASI goes beyond what is necessary, and be willing to say no to this kind of appliance — whatever form it takes. Because once it becomes essential, saying no will no longer be an option.

Try saying no to the internet and your cellphone today, and I bet you wouldn’t be able to do at least 70% of what you do in daily life.